API rate limits

What Is Rate Limiting?

Rate limiting (also known as “throttling”) is the process by which we intentionally limit the number of API requests that can be processed at any given time. Rate limits help ensure the overall stability of the system and protect the API from abuse.

To accommodate rapid scale and growth, we aim to offer scalable and reliable interfaces. As such, we have developed tokens with higher rate limits so merchants can scale seamlessly without needing to cycle through tokens. For more information about how you can avoid hitting rate limits, refer to the “How Do I Avoid Triggering Rate Limits?” section of this document.

How Does Rate Limiting Work?

Each API token has its own request queue. When Recharge receives an API request, we process it and then move on to the next request in that token's queue.

To keep requests flowing continuously, we use the “leaky bucket” algorithm, which holds incoming requests until the server is able to process them.

"Leaky Bucket" Example

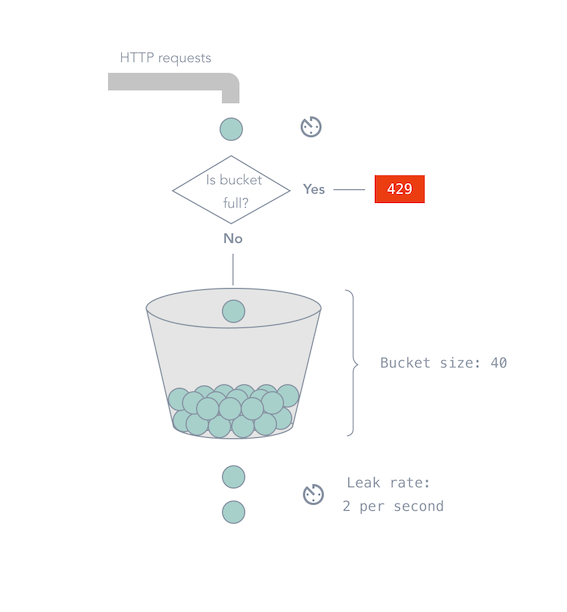

The API call limit uses a “leaky bucket” algorithm as its controller. This allows occasional bursts of calls while still letting your app make an unlimited number of calls over time. The algorithm works like a bucket that holds water: each incoming request is another drop in the bucket. As new requests fill the bucket, it “leaks” requests at a constant rate to make room for new ones. If the bucket becomes full, no additional requests are processed and a 429 error code is returned.
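The following Python sketch models the bucket described above, using Recharge's standard size of 40 and leak rate of 2 requests per second. It is a conceptual illustration, not Recharge's server implementation. The class name `LeakyBucket`, its `try_request` method, and the injectable clock are hypothetical. The sketch returns 200 when a request fits and 429 when the bucket would overflow.

```python
import time
from typing import Callable, Optional


class LeakyBucket:
    """Conceptual leaky-bucket model (hypothetical, for illustration only).

    Holds at most `capacity` requests and drains them at `leak_rate`
    requests per second.
    """

    def __init__(self, capacity: int = 40, leak_rate: float = 2.0,
                 clock: Optional[Callable[[], float]] = None) -> None:
        self.capacity = capacity
        self.leak_rate = leak_rate
        self.clock = clock or time.monotonic  # injectable so examples are deterministic
        self.level = 0.0                      # requests currently in the bucket
        self.last = self.clock()              # time of the last drain calculation

    def _leak(self) -> None:
        # Drain the bucket in proportion to the time elapsed since the last check.
        now = self.clock()
        self.level = max(0.0, self.level - (now - self.last) * self.leak_rate)
        self.last = now

    def try_request(self) -> int:
        """Return 200 if the request fits, or 429 if the bucket would overflow."""
        self._leak()
        if self.level + 1 > self.capacity:
            return 429
        self.level += 1
        return 200


class FakeClock:
    """Manually advanced clock used to make the example deterministic."""

    def __init__(self) -> None:
        self.t = 0.0

    def __call__(self) -> float:
        return self.t


clock = FakeClock()
bucket = LeakyBucket(clock=clock)
first_burst = [bucket.try_request() for _ in range(41)]
print(first_burst.count(200), first_burst[-1])   # 40 429
clock.t += 1.0  # one second later, 2 slots have leaked out
after_one_second = [bucket.try_request() for _ in range(3)]
print(after_one_second)                          # [200, 200, 429]
```

The 41st request in an instant burst overflows the bucket. One second later, exactly two more requests fit.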

For Recharge, the bucket size is 40 calls (which cannot be exceeded at any given time), and a “leak rate” of 2 calls per second continually empties the bucket. If your app makes calls at a steady rate of 2 per second or less, it will never trip a 429 error (“bucket overflow”).

- At a sustained rate of 2 requests per second, you can make 2 × 60 = 120 requests per minute.

- If you make a burst of 39 requests, the bucket is at 39/40, leaving room for only 1 more request. For each second in which you make no requests, 2 more requests become available. Therefore, if you make 39 requests and then wait 10 seconds, the bucket drains to 19/40.

- Starting from an empty bucket, you can send a burst of 40 requests at once and then 20 more every 10 seconds. With perfect timing, this allows up to 40 + (2 × 60) = 160 requests in the first minute. After that, throughput settles at the leak rate of 120 requests per minute.
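The arithmetic in these examples can be checked with a few lines of Python. This sketch assumes the bucket drains continuously at exactly 2 requests per second. The helper names are hypothetical.

```python
CAPACITY = 40   # bucket size, in requests
LEAK_RATE = 2   # requests drained per second


def level_after_wait(level: int, seconds: int) -> int:
    """Bucket fill level after waiting `seconds` without sending requests."""
    return max(0, level - LEAK_RATE * seconds)


def max_requests_in_window(seconds: int, start_level: int = 0) -> int:
    """Most requests that can be accepted in a window starting at `start_level`.

    This is the free space at the start of the window plus everything that
    leaks out during it (reachable only with perfect timing).
    """
    return (CAPACITY - start_level) + LEAK_RATE * seconds


print(level_after_wait(39, 10))              # 19
print(max_requests_in_window(60))            # 160: first minute, empty bucket
print(max_requests_in_window(60, CAPACITY))  # 120: starting from a full bucket
```

The 160 figure depends on starting with an empty bucket. Once the bucket is full, each additional minute adds only the 120 requests that leak out.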

The image at the start of this section shows what the “leaky bucket” algorithm looks like conceptually.

Why Leaky Bucket?

Other rate-limiting algorithms exist, such as fixed window and sliding window. We chose the leaky bucket algorithm because of its widespread adoption, its tolerance of bursts of calls (which occur often in eCommerce), and its efficient use of resources.

The 429 Error Code

If you reach your assigned rate limit, you will see the following error code:

“Error 429 - Too many requests”

Receiving this error means that you need to take remediation steps to ensure Recharge can process subsequent requests.

For more detailed information about this error, refer to the following link:

How Do I Avoid Triggering Rate Limits?

As a merchant, you have several ways to avoid triggering rate limits and receiving rate limit errors when making requests. Understanding our standard rate limit is the easiest way to make sure you can continue making API requests without interruption.

The Standard Recharge Rate Limit

We have implemented a token-based process for handling API requests. Each merchant is granted the following standard (1x1 multiplier) rate limit:

- 2 requests per second leak rate

- 40 request bucket size

Leak Rate and Bucket Size

“Leak rate” refers to the number of requests the server processes (drains from the bucket) per second. “Bucket size” refers to the maximum number of requests that can be held in the bucket at any one time.
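Together, these two numbers tell a client how long to pause before it has room for more requests. The Python sketch below assumes the standard limits and a fill level that your own code estimates client-side. It does not assume that Recharge reports the fill level to you. The function name `seconds_until_room` is hypothetical.

```python
BUCKET_SIZE = 40   # standard bucket size, in requests
LEAK_RATE = 2.0    # standard leak rate, in requests per second


def seconds_until_room(current_level: float, n: int = 1) -> float:
    """Seconds to wait until `n` more requests fit in the bucket.

    `current_level` is your own client-side estimate of how full the bucket is.
    """
    if n > BUCKET_SIZE:
        raise ValueError("a single burst cannot exceed the bucket size")
    overflow = current_level + n - BUCKET_SIZE  # requests that would not fit now
    return max(0.0, overflow / LEAK_RATE)


print(seconds_until_room(40))      # 0.5
print(seconds_until_room(40, 20))  # 10.0
print(seconds_until_room(10, 5))   # 0.0
print(BUCKET_SIZE / LEAK_RATE)     # 20.0 (seconds for a full bucket to drain completely)
```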

Since you will most likely use multiple tokens for API requests, this limit should be sufficient for your normal day-to-day operations. However, as your business scales and grows, you may find yourself reaching your rate limit more frequently. If this happens, you can take the following remediation steps:

- Distribute your API requests across several tokens.

- Review your application design to ensure it is optimized to work with Recharge.

- Request a Rate Limit increase.

Distributing API Requests Across Multiple Tokens

One way to minimize the possibility of receiving a 429 error is to distribute your API calls across multiple tokens. By keeping an array of tokens and cycling through them as you make calls, you perform a kind of “load balancing” that limits the number of API requests made with any single token.

For example, if you have 3 tokens, you may wish to proceed as follows:

- API call 1 ⇒ Token 1

- API call 2 ⇒ Token 2

- API call 3 ⇒ Token 3

- API call 4 ⇒ Token 1

- API call 5 ⇒ Token 2

In this example, each token handles about one third of the calls. Because each token has its own queue, spreading requests across three tokens helps you avoid reaching the rate limit on any one of them.
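A round-robin rotation like the one in this example can be sketched with Python's `itertools.cycle`. The token strings are hypothetical placeholders for your own Recharge API tokens. How you attach the token to each request depends on your HTTP client and is not shown here.

```python
from itertools import cycle

# Hypothetical placeholder tokens; substitute your own Recharge API tokens.
tokens = ["token_1", "token_2", "token_3"]
token_pool = cycle(tokens)


def next_token() -> str:
    """Return the next token in round-robin order."""
    return next(token_pool)


assignments = [(call, next_token()) for call in range(1, 6)]
for call, token in assignments:
    print(f"API call {call} => {token}")
```

This prints the same mapping as the list above: calls 1 to 3 use tokens 1 to 3, and calls 4 and 5 wrap around to tokens 1 and 2. If several threads share one pool, consider guarding `next_token` with a `threading.Lock`.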

Evaluating Your Application Design to Work with Recharge

In order to work more efficiently with us, there are a number of best practices you should adopt in your application design. By following these recommendations, you can optimize your integration with us and minimize the possibility of reaching your rate limit. Some of these recommendations include:

- Only make necessary calls and try to eliminate redundant calls.

- Use the API’s Extension functionality so you optimize your calls when fetching data.

- When working on backend asynchronous processes, make use of the Async Batch Endpoints.

- Handle your 429s gracefully. Where possible, if you get a 429 error, sleep for at least 2 seconds and then retry your request.

- Sleep where and when you can. This is particularly relevant to backend processes that are not time-sensitive. When a backend process makes a large number of calls, you can add regular sleep() intervals to space out the calls and avoid ever hitting the rate limit.
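The last two recommendations can be combined in a small Python sketch. This is a sketch under assumptions, not a Recharge client library. The `send` callables stand in for your real HTTP calls and return a simplified `(status, body)` pair. All function names are hypothetical. The retry helper waits the recommended minimum of 2 seconds after a 429. It then doubles the wait on each consecutive 429; this exponential backoff is an added assumption, not a documented Recharge requirement. The pacing helper spaces non-urgent calls 0.5 seconds apart, which keeps a single token at or below the 2 requests per second leak rate.

```python
import time
from typing import Callable, Iterable, List, Tuple

Response = Tuple[int, str]  # (HTTP status code, body), simplified for this sketch


def call_with_retry(send: Callable[[], Response], max_retries: int = 5,
                    base_delay: float = 2.0,
                    sleep: Callable[[float], None] = time.sleep) -> Response:
    """Call `send`, retrying on HTTP 429 after sleeping at least `base_delay` seconds.

    The delay doubles after each consecutive 429 (assumed exponential backoff).
    """
    delay = base_delay
    for attempt in range(max_retries + 1):
        status, body = send()
        if status != 429:
            return status, body
        if attempt == max_retries:
            break                  # out of retries: return the last 429
        sleep(delay)
        delay *= 2
    return status, body


def paced(calls: Iterable[Callable[[], Response]], interval: float = 0.5,
          sleep: Callable[[float], None] = time.sleep) -> List[Response]:
    """Run non-urgent calls one at a time, sleeping `interval` seconds between them."""
    results: List[Response] = []
    for i, call in enumerate(calls):
        if i:
            sleep(interval)        # buffer between calls to stay under the leak rate
        results.append(call_with_retry(call, sleep=sleep))
    return results


# Simulated responses: two 429s, then success. `slept` records the waits instead of sleeping.
responses = iter([(429, ""), (429, ""), (200, "ok")])
slept: List[float] = []
result = call_with_retry(lambda: next(responses), sleep=slept.append)
print(result, slept)                  # (200, 'ok') [2.0, 4.0]

pauses: List[float] = []
paced_results = paced([lambda: (200, "a"), lambda: (200, "b")], sleep=pauses.append)
print(paced_results, pauses)          # [(200, 'a'), (200, 'b')] [0.5]
```

Injecting `sleep` keeps the example fast and testable. In production you would leave the default `time.sleep` in place.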

Requesting a Rate Limit Increase

If you still find you are triggering 429 errors and reaching your rate limit, you may need a rate limit increase so your application can continue making API requests. The steps are the same whether you are on the Standard or PRO plan:

- Reach out to [Recharge Support](https://support.rechargepayments.com/hc/en-us/requests/new#) to discuss increasing your rate limit.

Our Support team will evaluate your needs and provide you with recommendations and strategies that can help mitigate errors and avoid reaching your rate limits. If we determine that you need to have your rate limit increased, we will work with you on increasing your rate limit.

Additional Resources

If you would like to learn more about how to use the Recharge API, please refer to the following documentation:
